How DevSecOps Closes the Chronic Conflict

“The need of the hour is twofold: to push both quality and security to the source. In today’s digital landscape, ensuring software quality and security from the very beginning is not just a best practice; it’s an imperative for safeguarding your applications and data.”

First, let us look at the three DevSecOps principles that help close the chronic conflict between Development and Operations.

3 Principles of DevSecOps:

Flow, Feedback, and Continuous Learning

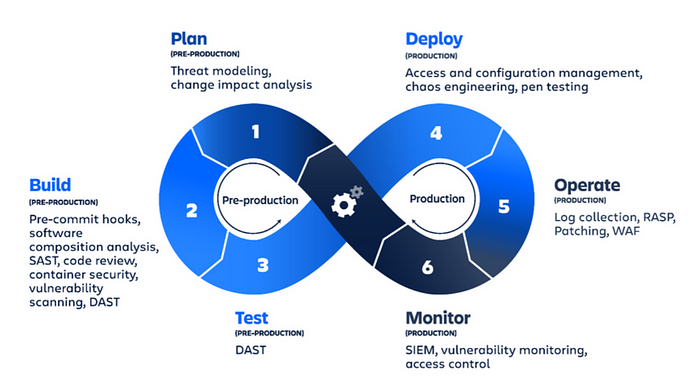

Flow: Flow emphasizes the smooth and efficient movement of work through the entire software delivery pipeline. In the context of DevSecOps, the focus is on integrating security practices into the flow of work.

Fast Feedback: Feedback is about creating short feedback loops in the development process to detect and address issues as early as possible. DevSecOps promotes gathering security-related feedback throughout the software development lifecycle.

Continuous Learning: Continuous learning emphasizes a culture of continuous improvement and learning from both successes and failures. In DevSecOps, continuous learning extends to security practices. Security teams and developers should continuously learn about emerging threats, vulnerabilities, and best practices.

A Brief Introduction:

I see DevSecOps as “a practice aimed at delivering fast, resilient, and robust software by fostering a shift-left security mindset in the software development process, minimizing time to market while maximizing software quality.”

Rather than merely adopting the latest technologies, tools, and practices, DevSecOps must focus on outcomes.

Key Practices:

There are several de facto rules and best practices in DevSecOps; adopt the ones that suit your requirements. Some of the key practices are listed below.

- Continuous Integration/Continuous Deployment (CI/CD)

- Infrastructure as Code (IaC)

- Vulnerability Scanning

- Compliance as Code

- Shift-Left Security

- Threat Modeling

- Incident Response Automation

- Monitoring and Logging
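To make "Compliance as Code" concrete, here is a minimal sketch in Python, the application's language. It assumes the ECS `task-definition.json` that the pipeline uses later. It checks a few illustrative rules: no privileged containers, a read-only root filesystem, and an explicit, non-`latest` image tag. The rules and the `check_task_definition` / `check_file` functions are hypothetical examples, not a standard policy set. `privileged`, `readonlyRootFilesystem`, and `image` are real ECS container definition fields.

```python
# Compliance-as-code sketch: illustrative policy rules for an ECS task definition.
# The rules are assumptions chosen for the example, not an official policy set.
import json
from typing import List


def check_task_definition(task_def: dict) -> List[str]:
    """Return human-readable policy violations found in an ECS task definition."""
    violations = []
    for container in task_def.get("containerDefinitions", []):
        name = container.get("name", "<unnamed>")
        # Rule 1: privileged containers get host-level access, so forbid them.
        if container.get("privileged", False):
            violations.append(f"{name}: privileged mode is not allowed")
        # Rule 2: a read-only root filesystem limits what an attacker can modify.
        if not container.get("readonlyRootFilesystem", False):
            violations.append(f"{name}: root filesystem should be read-only")
        # Rule 3: require an explicit tag (or digest) that is not 'latest'.
        last_segment = container.get("image", "").rsplit("/", 1)[-1]
        if ":" not in last_segment or last_segment.endswith(":latest"):
            violations.append(f"{name}: image must use an explicit, non-latest tag")
    return violations


def check_file(path: str = "task-definition.json") -> List[str]:
    """Load a task definition from disk and check it."""
    with open(path) as fh:
        return check_task_definition(json.load(fh))
```

Run from a pipeline stage, a non-empty result can be turned into a failed build, for example by exiting with a non-zero status. That is what makes the policy enforceable rather than advisory.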

DevSecOps Implementation: Read on if you are interested in how DevSecOps can be implemented with tools and scripts, so that software quality and security are verified in the Continuous Integration pipeline itself.

Note: You can build many variants of a DevSecOps pipeline with different tools and technologies, depending on your requirements.

Scope:

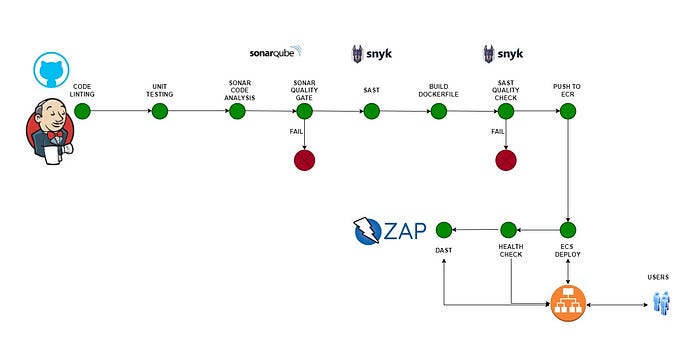

A developer builds a Python web application and pushes the code to GitHub. This triggers the Jenkins pipeline, which builds the web application and deploys it to AWS ECS while following the DevSecOps best practices and key principles.
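The pipeline's final stage, Application Health Check, is left open because it depends on your architecture. One possible sketch is a small Python poller that a Jenkins `sh` step could run after `aws ecs update-service`. It assumes the deployed service is reachable over HTTP (for example through the load balancer in front of the ECS service) and exposes a health endpoint. The `wait_until_healthy` function and its retry defaults are hypothetical, and the code uses only the standard library.

```python
# Sketch: poll an HTTP health endpoint after an ECS rolling deployment.
import time
import urllib.request


def wait_until_healthy(url: str, attempts: int = 10, delay_seconds: float = 15.0,
                       timeout_seconds: float = 5.0) -> bool:
    """Return True as soon as url answers HTTP 200, False after all attempts fail."""
    for attempt in range(1, attempts + 1):
        try:
            with urllib.request.urlopen(url, timeout=timeout_seconds) as response:
                if response.status == 200:
                    return True
                print(f"attempt {attempt}: unexpected status {response.status}")
        except OSError as exc:  # URLError, HTTPError and socket timeouts are OSError subclasses
            print(f"attempt {attempt}: {exc}")
        if attempt < attempts:
            time.sleep(delay_seconds)  # give new ECS tasks time to start and register
    return False
```

The retry loop matters because `update-service` returns immediately, while a rolling deployment needs time before new tasks pass their health checks. The AWS CLI's `aws ecs wait services-stable` is another way to wait for the deployment itself.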

Pre-requisites:

Jenkins & SonarQube Installation on your Ubuntu Server: To install Jenkins and SonarQube on the same server or on separate servers, follow the official documentation or use an automated script.

AWS ECR Configuration: Follow this page to create an ECR repository and push images to it.

Snyk Configuration: Since Snyk is an enterprise tool, follow the official documentation. Contact me if you need input on Snyk integrations.
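The Snyk stages in the pipeline are not shared, and the Snyk Quality Gates stage is left as a placeholder. The Python below is a hedged sketch of what a custom gate could look like. It assumes the scan's JSON output (for example from `snyk container test <image> --json`) has been saved to a file. It also assumes that output has a top-level `vulnerabilities` list whose entries carry `id` and `severity`. Verify that layout against your Snyk CLI version. The thresholds and function names are hypothetical.

```python
# Hypothetical custom quality gate over a Snyk JSON report.
import json
from collections import Counter
from typing import Dict, List, Tuple, Union

Report = Union[dict, List[dict]]


def count_by_severity(report: Report) -> Counter:
    """Count unique vulnerabilities per severity (low/medium/high/critical)."""
    # Some Snyk commands emit a list of project results instead of a single object.
    projects = report if isinstance(report, list) else [report]
    severity_by_id = {}
    for project in projects:
        for vuln in project.get("vulnerabilities", []):
            # The same vulnerability can appear once per dependency path; key by id.
            severity_by_id[vuln["id"]] = vuln.get("severity", "unknown")
    return Counter(severity_by_id.values())


def gate_passes(report: Report, max_allowed: Dict[str, int]) -> Tuple[bool, Counter]:
    """Pass only if every listed severity stays at or below its allowed count."""
    counts = count_by_severity(report)
    passed = all(counts.get(sev, 0) <= limit for sev, limit in max_allowed.items())
    return passed, counts


def gate_from_file(path: str, max_allowed: Dict[str, int]) -> Tuple[bool, Counter]:
    """Load a saved Snyk JSON report and evaluate the gate."""
    with open(path) as fh:
        return gate_passes(json.load(fh), max_allowed)
```

Deduplicating by `id` matters because Snyk can list the same vulnerability once per dependency path, which would otherwise inflate the counts and trip the gate too early.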

ECS Creation: Follow this page to create an ECS cluster. ECS orchestrates the containerized application.
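In the ECS Deployment stage, the pipeline uses `jq` to point the task definition at the freshly built image before registering a new revision. If `jq` is not available on the Jenkins agent, the same substitution can be sketched in Python. The optional `container_name` argument is an addition that is not in the original pipeline. The `jq` filter always updates `containerDefinitions[0]`, which silently picks the wrong container if a sidecar is listed first.

```python
# Sketch: replace the container image in an ECS task definition (a jq alternative).
import copy
import json
from typing import Optional


def set_container_image(task_def: dict, image: str, container_name: Optional[str] = None) -> dict:
    """Return a copy of task_def whose container image is replaced.

    Without container_name this mirrors the jq filter and updates the first container;
    with container_name it updates the container(s) carrying that name.
    """
    updated = copy.deepcopy(task_def)  # never mutate the caller's dict
    containers = updated.get("containerDefinitions", [])
    if not containers:
        raise ValueError("task definition has no containerDefinitions")
    if container_name is None:
        targets = containers[:1]
    else:
        targets = [c for c in containers if c.get("name") == container_name]
        if not targets:
            raise ValueError(f"no container named {container_name!r}")
    for container in targets:
        container["image"] = image
    return updated


def update_file(path: str, image: str, container_name: Optional[str] = None) -> None:
    """Rewrite the task definition file; it is only opened for writing after the update succeeds."""
    with open(path) as fh:
        updated = set_container_image(json.load(fh), image, container_name)
    with open(path, "w") as fh:
        json.dump(updated, fh, indent=2)
```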

DAST Tool: Here, I have used ZAP to scan the URL where the application is deployed. To learn about ZAP and its implementation, visit this page.
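To let ZAP results gate a release rather than only produce a report, one option is to filter the findings by risk level. The minimal sketch below assumes ZAP's traditional JSON report, for example as produced by the packaged baseline scan with `zap-baseline.py -t <url> -J report.json`. In that report, `site` holds the scanned sites and each alert carries a `riskcode` from 0 (Informational) to 3 (High). The function names and the Medium default threshold are assumptions.

```python
# Sketch: list ZAP alerts at or above a risk level from a traditional JSON report.
import json
from typing import List, Tuple

RISK_NAMES = {0: "Informational", 1: "Low", 2: "Medium", 3: "High"}


def zap_findings(report: dict, min_risk: int = 2) -> List[Tuple[str, str, str]]:
    """Return (site, alert name, risk label) for alerts with riskcode >= min_risk."""
    sites = report.get("site", [])
    if isinstance(sites, dict):  # tolerate a single site serialized as an object
        sites = [sites]
    findings = []
    for site in sites:
        for alert in site.get("alerts", []):
            risk = int(alert.get("riskcode", 0))  # riskcode is serialized as a string
            if risk >= min_risk:
                name = alert.get("name") or alert.get("alert", "<unnamed>")
                findings.append((site.get("@name", "<unknown site>"), name, RISK_NAMES.get(risk, str(risk))))
    return findings


def findings_from_file(path: str, min_risk: int = 2) -> List[Tuple[str, str, str]]:
    """Load a saved ZAP JSON report and return the findings at or above min_risk."""
    with open(path) as fh:
        return zap_findings(json.load(fh), min_risk)
```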

DevSecOps Pipeline Architecture

The DevSecOps setup below can be achieved if you follow the prerequisites and tool integrations appropriately.

App Code Repo: The application is a simple Python web application, similar to a Hello World application.
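The article does not show the application itself. As a hypothetical stand-in, here is a stdlib-only Hello World WSGI app, together with the kind of tests the Unit Testing stage runs via `python3 -m unittest test_app.py`. In a real repo, the app and the `AppTests` class would live in separate `app.py` and `test_app.py` files. They are combined here so the sketch is self-contained. The author's actual app may well use a framework such as Flask instead.

```python
# Hypothetical app.py + test_app.py combined: a stdlib-only Hello World WSGI app and its tests.
import unittest
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """Minimal WSGI app: '/' says hello, '/health' reports OK, anything else is 404."""
    path = environ.get("PATH_INFO", "/")
    if path == "/":
        status, body = "200 OK", b"Hello, World!"
    elif path == "/health":
        status, body = "200 OK", b"OK"
    else:
        status, body = "404 Not Found", b"Not Found"
    start_response(status, [("Content-Type", "text/plain"), ("Content-Length", str(len(body)))])
    return [body]


def call(path):
    """Invoke the WSGI app in-process and return (status, body) without starting a server."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], body


class AppTests(unittest.TestCase):
    def test_root_says_hello(self):
        self.assertEqual(call("/"), ("200 OK", b"Hello, World!"))

    def test_health(self):
        self.assertEqual(call("/health")[0], "200 OK")

    def test_unknown_path_is_404(self):
        self.assertEqual(call("/missing")[0], "404 Not Found")
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves the app on port 8000. The `/health` route is what a post-deployment check or a load balancer target group could probe.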

Jenkins Plugins: AWS Credentials, Pipeline: AWS Steps, Snyk Security, Sonar Quality Gates Plugin, SonarQube Scanner, Amazon ECR plugin, HTML Publisher, Credentials, Docker, Docker Pipeline, GitHub.

SonarQube Integration: Please go through this blog for the SonarQube integration steps.
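The Quality Gate Check stage relies on `waitForQualityGate()`, which needs a SonarQube webhook that can reach Jenkins. Where that is not possible, one alternative is to ask SonarQube directly through its Web API endpoint `api/qualitygates/project_status`. The minimal sketch below assumes a user token with access to the project, and its function names are hypothetical. The returned status reflects the last completed analysis, so the background analysis task must finish before you query it.

```python
# Sketch: read a project's quality gate status from the SonarQube Web API.
import base64
import json
import urllib.parse
import urllib.request


def quality_gate_url(server_url: str, project_key: str) -> str:
    """Build the api/qualitygates/project_status URL for a project."""
    query = urllib.parse.urlencode({"projectKey": project_key})
    return f"{server_url.rstrip('/')}/api/qualitygates/project_status?{query}"


def failed_conditions(payload: dict):
    """Return (overall status, list of conditions whose status is ERROR)."""
    project_status = payload["projectStatus"]
    failed = [c for c in project_status.get("conditions", []) if c.get("status") == "ERROR"]
    return project_status["status"], failed


def fetch_quality_gate(server_url: str, project_key: str, token: str) -> dict:
    """Query SonarQube; the token is sent as the Basic-auth username with an empty password."""
    request = urllib.request.Request(quality_gate_url(server_url, project_key))
    credentials = base64.b64encode(f"{token}:".encode()).decode()
    request.add_header("Authorization", f"Basic {credentials}")
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Sending the token as the Basic-auth username with an empty password is the long-standing SonarQube convention. Recent versions also accept `Authorization: Bearer <token>`.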

DevSecOps Repo: This repo holds the pipeline that executes all the required stages. A snippet of the pipeline is shown below; if you are interested in trying these stages, contact me for more details.

```groovy
pipeline {
    agent any

    environment {
        // Define the environment variables you need in this block.
        // They can be referenced anywhere in the stages of the pipeline as ${VARIABLE_NAME}.
        // <VARIABLE_NAME> = '<value>'
        ECR = '<aws-account-id>.dkr.ecr.eu-west-2.amazonaws.com'   // placeholder: your ECR registry
        GITREPO = '<application code repo URL>'                     // placeholder: your app repo
        AWS_DEFAULT_REGION = 'eu-west-2'
        TASK_DEFINITION_FILE = 'task-definition.json'
    }

    parameters {
        // The parameters the pipeline is executed with. You can include as many as you wish.
        string(name: 'dev_repo', defaultValue: '', description: 'Application code repository')
        string(name: 'language', defaultValue: 'python', description: 'Drives the linting and unit testing stages')
        string(name: 'ecr_repo', defaultValue: '', description: 'ECR repository the image is pushed to')
    }

    stages {
        stage('Authenticate Docker with ECR') {
            // This stage may not be required, depending on your existing architecture.
            // It is only needed to run AWS CLI commands and to push images to ECR.
            steps {
                script {
                    withCredentials([[$class: 'AmazonWebServicesCredentialsBinding', credentialsId: 'aws',
                                      accessKeyVariable: 'AWS_ACCESS_KEY_ID', secretKeyVariable: 'AWS_SECRET_ACCESS_KEY']]) {
                        def awsCliCmd = "aws ecr get-login-password --region ${AWS_DEFAULT_REGION}"
                        def dockerLoginCmd = "docker login --username AWS --password-stdin ${ECR}"
                        def authCmd = "${awsCliCmd} | ${dockerLoginCmd}"
                        echo "${authCmd}"   // double quotes, so the command is interpolated
                        sh authCmd
                    }
                }
            }
        }

        stage('Code Linting') {
            steps {
                echo 'Cloning the code repository'
                dir('<your dir choice>') {
                    // Double quotes, so Groovy interpolates GITREPO
                    git branch: 'main', credentialsId: '<credential Name>', url: "${GITREPO}"
                    script {
                        // Linting can live in separate Groovy scripts or in the dev repo itself
                        if (params.language == 'python') {
                            // '|| true' keeps lint findings from failing the build; remove it to enforce linting
                            sh 'pylint --output-format=text --output codelintreport.txt *.py || true'
                            sh 'echo "The linting report is ready"'
                            sh 'cat codelintreport.txt'
                        } else {
                            sh 'echo "write your linting cases and run it"'
                        }
                    }
                }
            }
        }

        stage('Unit Testing') {
            steps {
                // Run the tests in the same directory the code was cloned into
                dir('<your dir choice>') {
                    script {
                        if (params.language == 'python') {
                            sh 'python3 -m unittest test_app.py'
                        } else {
                            sh 'echo "write your test case and run it"'
                        }
                    }
                }
            }
        }

        stage('SonarQube Code Analysis') {
            steps {
                // Run SonarQube analysis for Python;
                // sonar-project.properties is checked into the dev code repo
                dir('<your dir choice>') {
                    script {
                        def scannerHome = tool name: 'sonarqube-scanner', type: 'hudson.plugins.sonar.SonarRunnerInstallation'
                        withSonarQubeEnv('sonar') {
                            sh "${scannerHome}/bin/sonar-scanner"
                        }
                    }
                }
            }
        }

        stage('SonarQube Quality Gate Check') {
            // Enforces the quality gate: the pipeline continues only if the gate passes.
            steps {
                script {
                    // waitForQualityGate needs a SonarQube webhook pointing at Jenkins;
                    // the timeout stops the build from waiting forever if the webhook never arrives
                    timeout(time: 5, unit: 'MINUTES') {
                        def qualityGate = waitForQualityGate()
                        echo "Quality gate status: ${qualityGate.status}"
                        if (qualityGate.status != 'OK') {
                            error "Quality Gate failed: ${qualityGate.status}"
                        }
                        echo 'SonarQube Quality Gates Passed'
                    }
                }
            }
        }

        stage('SAST') {
            steps {
                // SNYK - ENTERPRISE TOOL: the actual stages are not shared here.
                echo 'Run your Snyk SAST steps here'
            }
        }

        stage('Containerization') {
            steps {
                script {
                    // Build context: the directory holding the application code and its Dockerfile.
                    // 'image' and 'customImage' are deliberately declared without 'def'
                    // so that later stages can reuse them.
                    image = "${ECR}/${params.ecr_repo}:${env.BUILD_ID}"
                    echo "Image to be built: ${image}"
                    customImage = docker.build(image, '<dirname>')
                }
            }
        }

        stage('SAST - Snyk Security Scan') {
            steps {
                script {
                    echo "Scanning image: ${image}"
                    try {
                        // SNYK - STEPS
                        echo 'Run your Snyk container scan here'
                    } catch (Exception e) {
                        // Ensures a default Snyk failure does not fail the Jenkins pipeline
                        echo "Error occurred during container test: ${e.message}"
                    }
                }
            }
        }

        stage('Snyk Quality Gates') {
            steps {
                script {
                    // CUSTOM QUALITY GATES TO BE WRITTEN
                    echo 'Evaluate the Snyk results against your thresholds here'
                }
            }
        }

        stage('Snyk Base Image Vulnerability Check') {
            // An additional level of security: the dev team may change the base image in the
            // Dockerfile, or the base image may carry zero-day vulnerabilities. To track this,
            // the base image of the built image is also scanned.
            steps {
                echo 'Run your Snyk base image scan here'
            }
        }

        stage('Manual Judgment') {
            // A manual validation to ensure no vulnerabilities are overlooked
            steps {
                // Abort automatically if nobody responds within an hour
                timeout(time: 1, unit: 'HOURS') {
                    input message: 'Please check the vulnerability reports. Do you want to proceed with pushing the image to ECR?',
                          ok: 'Proceed'
                }
            }
        }

        stage('Push Image to ECR') {
            steps {
                script {
                    // Amazon ECR plugin credential format: 'ecr:<region>:<credentialsId>'
                    // (adjust 'aws' to the credentials ID used in your Jenkins)
                    docker.withRegistry("https://${ECR}", "ecr:${AWS_DEFAULT_REGION}:aws") {
                        echo 'Pushing the image to ECR'
                        customImage.push()
                    }
                    echo "Deploying ${image} to ECS"
                }
            }
        }

        stage('ECS Deployment') {
            // Extracts the AWS credentials, registers a new task definition revision that uses
            // the freshly built image, and updates the service.
            // Assumes the ECS cluster, the service, and task-definition.json already exist.
            steps {
                withCredentials([[$class: 'AmazonWebServicesCredentialsBinding', credentialsId: 'aws',
                                  accessKeyVariable: 'AWS_ACCESS_KEY_ID', secretKeyVariable: 'AWS_SECRET_ACCESS_KEY']]) {
                    script {
                        // Replace the image of the first container with the image built in this run
                        sh """
                            jq '.containerDefinitions[0].image = "${image}"' ${TASK_DEFINITION_FILE} > newTaskDefinition.json
                            mv newTaskDefinition.json ${TASK_DEFINITION_FILE}
                            cat ${TASK_DEFINITION_FILE}
                        """
                        // file:// avoids shell-quoting problems with inline JSON;
                        // --query extracts the ARN of the new revision for update-service
                        def taskDefinitionArn = sh(
                            script: "aws ecs register-task-definition --cli-input-json file://${TASK_DEFINITION_FILE} --query 'taskDefinition.taskDefinitionArn' --output text",
                            returnStdout: true
                        ).trim()
                        echo "Registered task definition: ${taskDefinitionArn}"
                        // Updating the service triggers a rolling deployment
                        sh """
                            aws ecs update-service \\
                                --cluster <cluster name> \\
                                --service <service name> \\
                                --task-definition ${taskDefinitionArn}
                        """
                    }
                }
            }
        }

        stage('Application Health Check') {
            steps {
                script {
                    // This depends on your architecture.
                    // If you need details on running the ECS ELB health check from Jenkins, contact me.
                    echo 'Add your application health check here'
                }
            }
        }
    }

    post {
        always {
            // Any post-run steps can be added here: report generation, successor job triggers, etc.
            echo 'Pipeline run finished'
        }
    }
}
```

“Security isn’t a solo act; it’s an ensemble performance”

Thanks for reading.